This topic describes common Linux kernel network parameters of Elastic Compute Service (ECS) instances and answers frequently asked questions about these parameters.

Considerations

Before you modify kernel parameters, take note of the following items:

We recommend that you modify kernel parameters based only on your business requirements and relevant data.

Before you modify kernel parameters, make sure that you understand the purpose of each parameter. The behavior and availability of a kernel parameter can vary with the environment type and kernel version.

Back up important data of an ECS instance. For more information, see Create a snapshot for a disk.

Query and modify kernel parameters of a Linux instance

You can modify kernel parameters at runtime through the /proc/sys/ directory, or persistently through the /etc/sysctl.conf configuration file. The two methods differ as follows:

/proc/sys/ is a virtual file system that exposes kernel parameters. The net directory in this file system contains all network kernel parameters that are in effect. Changes made here take effect immediately but are lost after the instance restarts, so this method is suited to temporarily verifying a change.

/etc/sysctl.conf is a configuration file in which you can set the values of kernel parameters. Settings in this file persist after the instance restarts. To apply them without a restart, run sysctl -p.

Files in the /proc/sys/ directory correspond to the full names of parameters in the /etc/sysctl.conf configuration file. For example, the /proc/sys/net/ipv4/tcp_tw_recycle file corresponds to the net.ipv4.tcp_tw_recycle parameter in the configuration file. The file content is the parameter value.
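The correspondence between file paths and parameter names is mechanical: the slashes after /proc/sys/ become dots. The following Python sketch shows the conversion in both directions. The function names are illustrative, and the sketch assumes that no component of a parameter name contains a dot itself (names that embed a dotted interface name, such as a VLAN device, are not handled).

```python
from pathlib import PurePosixPath

PROC_SYS = PurePosixPath("/proc/sys")


def param_to_path(name: str) -> PurePosixPath:
    """Convert a sysctl name such as net.ipv4.tcp_tw_recycle to its /proc/sys file."""
    # Assumption: no component of the name contains a dot itself.
    return PROC_SYS.joinpath(*name.split("."))


def path_to_param(path: str) -> str:
    """Convert a /proc/sys file path back to the dotted sysctl name."""
    relative = PurePosixPath(path).relative_to(PROC_SYS)
    return ".".join(relative.parts)
```

For example, param_to_path("net.ipv4.tcp_tw_recycle") returns /proc/sys/net/ipv4/tcp_tw_recycle, and path_to_param reverses it.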

The net.ipv4.tcp_tw_recycle parameter was removed from the Linux kernel in version 4.12. You can configure it only on kernels earlier than 4.12.
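If you manage instances that run different kernel versions, a script can check the kernel release before it sets net.ipv4.tcp_tw_recycle. The Python sketch below (the function name is hypothetical) applies the 4.12 cutoff to a release string in the format that uname -r prints. It compares only the major and minor version numbers, which assumes that the kernel follows the upstream removal.

```python
import re


def supports_tcp_tw_recycle(release: str) -> bool:
    """Return True if a kernel release string is older than 4.12.

    Only the leading major.minor numbers of strings such as
    "3.10.0-1160.el7.x86_64" are compared.
    """
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    version = (int(match.group(1)), int(match.group(2)))
    return version < (4, 12)
```

For example, supports_tcp_tw_recycle(platform.release()) checks the running kernel. An alternative that avoids parsing version strings is to check whether the /proc/sys/net/ipv4/tcp_tw_recycle file exists.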

View and modify kernel parameters by using the file in the /proc/sys/ directory

Log on to the ECS instance that runs a Linux operating system.

For more information, see Connection method overview.

Run the cat command to view the value of a kernel parameter. For example, to view the value of the net.ipv4.tcp_tw_recycle parameter, run the following command:

cat /proc/sys/net/ipv4/tcp_tw_recycle

Run the echo command as the root user to write a new value to the file of the kernel parameter. For example, to change the value of the net.ipv4.tcp_tw_recycle parameter to 0, run the following command:

echo "0" > /proc/sys/net/ipv4/tcp_tw_recycle
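The same cat and echo operations can be scripted. A minimal Python sketch with hypothetical helper names; the root argument defaults to /proc/sys and exists only so that the functions can be tried against an ordinary directory:

```python
from pathlib import Path


def param_file(name: str, root: Path = Path("/proc/sys")) -> Path:
    """Map a dotted sysctl name to its file under root."""
    return root.joinpath(*name.split("."))


def read_param(name: str, root: Path = Path("/proc/sys")) -> str:
    """Equivalent of `cat /proc/sys/...`: return the current value as text."""
    return param_file(name, root).read_text().strip()


def write_param(name: str, value: str, root: Path = Path("/proc/sys")) -> None:
    """Equivalent of `echo VALUE > /proc/sys/...`.

    Writing to the real /proc/sys requires root privileges, and the new
    value is lost when the instance restarts.
    """
    param_file(name, root).write_text(f"{value}\n")
```

On an instance, read_param("net.ipv4.tcp_fin_timeout") returns the current value, and write_param must run as root.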

View and modify kernel parameters of the /etc/sysctl.conf configuration file

Log on to the ECS instance that runs a Linux operating system.

For more information, see Connection method overview.

Run the following command to view all valid parameters in the current system:

sysctl -a

The following sample command output shows some of the kernel parameters:

net.ipv4.tcp_app_win = 31
net.ipv4.tcp_adv_win_scale = 2
net.ipv4.tcp_tw_reuse = 0
net.ipv4.tcp_frto = 2
net.ipv4.tcp_frto_response = 0
net.ipv4.tcp_low_latency = 0
net.ipv4.tcp_no_metrics_save = 0
net.ipv4.tcp_moderate_rcvbuf = 1
net.ipv4.tcp_tso_win_divisor = 3
net.ipv4.tcp_congestion_control = cubic
net.ipv4.tcp_abc = 0
net.ipv4.tcp_mtu_probing = 0
net.ipv4.tcp_base_mss = 512
net.ipv4.tcp_workaround_signed_windows = 0
net.ipv4.tcp_challenge_ack_limit = 1000
net.ipv4.tcp_limit_output_bytes = 262144
net.ipv4.tcp_dma_copybreak = 4096
net.ipv4.tcp_slow_start_after_idle = 1
net.ipv4.cipso_cache_enable = 1
net.ipv4.cipso_cache_bucket_size = 10
net.ipv4.cipso_rbm_optfmt = 0
net.ipv4.cipso_rbm_strictvalid = 1

Modify the kernel parameters.

Run the following command to temporarily modify a kernel parameter:

/sbin/sysctl -w kernel.parameter="[$Example]"

Note: Replace kernel.parameter with the name of a kernel parameter and [$Example] with a value based on your business requirements. For example, run the sysctl -w net.ipv4.tcp_tw_recycle="0" command to change the value of the net.ipv4.tcp_tw_recycle parameter to 0.

Permanently modify kernel parameters.

Run the following command to open the /etc/sysctl.conf configuration file:

vim /etc/sysctl.conf

Press the I key to enter Insert mode. Modify kernel parameters as needed.

For example, to disable IPv6, add the following settings to the file:

net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv6.conf.lo.disable_ipv6 = 1

Press the Esc key and enter :wq to save and close the file. Run the following command for the configurations to take effect:

/sbin/sysctl -p
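To check values in a script, for example to confirm that sysctl -p applied what you put in /etc/sysctl.conf, you can parse the key = value lines that both the sysctl -a output and the configuration file use. A minimal Python sketch; the function name parse_sysctl is hypothetical:

```python
from __future__ import annotations


def parse_sysctl(text: str) -> dict[str, str]:
    """Parse `key = value` lines from `sysctl -a` output or /etc/sysctl.conf.

    Blank lines and comment lines that start with '#' or ';' are skipped.
    Multi-field values such as net.ipv4.tcp_rmem stay as one string, and a
    key that appears twice keeps its last value, as when sysctl -p applies the file.
    """
    params: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key = value line
        params[key.strip()] = value.strip()
    return params
```

For example, parse the content of /etc/sysctl.conf and the output of sysctl -a separately, then compare the two dictionaries key by key to find settings that are not in effect.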

FAQ about the common kernel network parameters of Linux ECS instances

Why does a "Time wait bucket table overflow" error message appear in the /var/log/messages log?

Why does a Linux ECS instance have a large number of TCP connections in the FIN_WAIT2 state?

Why does a Linux ECS instance have a large number of TCP connections in the CLOSE_WAIT state?

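What do I do if I cannot connect to a Linux ECS instance and the "nf_conntrack: table full, dropping packet" error message appears in the /var/log/messages log?

Why am I unable to access an ECS instance or an ApsaraDB RDS instance after I configure NAT for my client?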

Problem description

You cannot connect to a Linux ECS instance. When you ping the instance, packets are lost or the ping fails. The following error message frequently appears in the /var/log/messages system log:

Feb 6 16:05:07 i-*** kernel: nf_conntrack: table full, dropping packet.

Feb 6 16:05:07 i-*** kernel: nf_conntrack: table full, dropping packet.

Feb 6 16:05:07 i-*** kernel: nf_conntrack: table full, dropping packet.

Feb 6 16:05:07 i-*** kernel: nf_conntrack: table full, dropping packet.

Cause

nf_conntrack (named ip_conntrack in earlier kernels) is the connection tracking module of the Linux netfilter framework. NAT and stateful iptables rules depend on it. The module records tracked connection entries in a hash table. When the table is full, packets that would create new entries are discarded, and the nf_conntrack: table full, dropping packet error message appears.

The memory that the kernel uses for connection tracking depends on the nf_conntrack_buckets parameter (the size of the hash table) and the nf_conntrack_max parameter (the maximum number of tracked entries). By default, the nf_conntrack_max value is four times the nf_conntrack_buckets value.

Each tracked entry consumes memory. If the system has sufficient idle memory, we recommend that you increase the value of the nf_conntrack_max parameter.
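To judge how close the table is to full before the error appears, compare the current number of tracked entries (net.netfilter.nf_conntrack_count) with net.netfilter.nf_conntrack_max. The Python sketch below (hypothetical function names) computes that usage and, if you want to keep the four-to-one ratio described above when you raise nf_conntrack_max, the matching nf_conntrack_buckets value. Any alerting threshold you apply to the percentage is your own choice, and other kernel versions may use a different default ratio.

```python
def conntrack_usage_percent(count: int, maximum: int) -> float:
    """Percentage of the connection tracking table in use.

    count   -- value of net.netfilter.nf_conntrack_count
    maximum -- value of net.netfilter.nf_conntrack_max
    """
    if maximum <= 0:
        raise ValueError("nf_conntrack_max must be positive")
    return 100.0 * count / maximum


def buckets_for_max(maximum: int, ratio: int = 4) -> int:
    """nf_conntrack_buckets value that keeps nf_conntrack_max = ratio * buckets."""
    return max(1, maximum // ratio)
```

For example, with nf_conntrack_max set to 655350 as in the solution below, buckets_for_max returns 163837.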

Solution

Use Virtual Network Computing (VNC) to connect to the instance.

For more information, see Connect to an instance by using VNC.

Change the values of the nf_conntrack_max and nf_conntrack_tcp_timeout_established parameters.

Run the following command to open the /etc/sysctl.conf file:

vi /etc/sysctl.conf

Press the I key to enter Insert mode. Change the value of the nf_conntrack_max parameter. Example: 655350.

net.netfilter.nf_conntrack_max = 655350

Change the value of the nf_conntrack_tcp_timeout_established parameter. Default value: 432000. Unit: seconds. Example: 1200.

net.netfilter.nf_conntrack_tcp_timeout_established = 1200

Press the Esc key and enter :wq to save and close the file.

Run the following command for the configurations to take effect:

sysctl -p
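Instead of editing /etc/sysctl.conf interactively in vi, you can script the change. The following Python sketch works on the file content as a string; set_sysctl_conf is a hypothetical helper name. It replaces every active line for the key (a later duplicate would otherwise override the new value when sysctl -p runs), leaves commented-out lines untouched, and appends the setting if the key is absent.

```python
import re


def set_sysctl_conf(text: str, key: str, value: str) -> str:
    """Return sysctl.conf content with `key = value` set."""
    pattern = re.compile(rf"^\s*{re.escape(key)}\s*=")
    new_line = f"{key} = {value}"
    lines = text.splitlines()
    found = False
    for i, line in enumerate(lines):
        if pattern.match(line):  # active (uncommented) entry for this key
            lines[i] = new_line
            found = True
    if not found:
        lines.append(new_line)
    return "\n".join(lines) + "\n"
```

Write the result back to /etc/sysctl.conf as root, and then run sysctl -p.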

Why does a "Time wait bucket table overflow" error message appear in the /var/log/messages log?

Problem description

The "kernel: TCP: time wait bucket table overflow" error message frequently appears in the /var/log/messages log of a Linux ECS instance.

Feb 18 12:28:38 i-*** kernel: TCP: time wait bucket table overflow

Feb 18 12:28:44 i-*** kernel: printk: 227 messages suppressed.

Feb 18 12:28:44 i-*** kernel: TCP: time wait bucket table overflow

Feb 18 12:28:52 i-*** kernel: printk: 121 messages suppressed.

Feb 18 12:28:52 i-*** kernel: TCP: time wait bucket table overflow

Feb 18 12:28:53 i-*** kernel: printk: 351 messages suppressed.

Feb 18 12:28:53 i-*** kernel: TCP: time wait bucket table overflow

Feb 18 12:28:59 i-*** kernel: printk: 319 messages suppressed.

Cause

The net.ipv4.tcp_max_tw_buckets parameter specifies the maximum number of connections that the kernel keeps in the TIME_WAIT state. When the number of connections in the TIME_WAIT state on the ECS instance reaches this value, the "kernel: TCP: time wait bucket table overflow" error message appears in the /var/log/messages log, and the kernel closes additional connections immediately instead of placing them in the TIME_WAIT state.

Solution

You can increase the net.ipv4.tcp_max_tw_buckets value based on your business requirements. We also recommend that you optimize how your applications create and maintain TCP connections. The following steps describe how to change the value of the net.ipv4.tcp_max_tw_buckets parameter.

Use VNC to connect to the instance.

For more information, see Connect to an instance by using VNC.

Run the following command to query the number of existing TCP connections:

netstat -antp | awk 'NR>2 {print $6}' | sort | uniq -cThe following command output indicates that 6,300 connections are in the TIME_WAIT state:

6300 TIME_WAIT 40 LISTEN 20 ESTABLISHED 20 CONNECTEDRun the following command to view the value of the

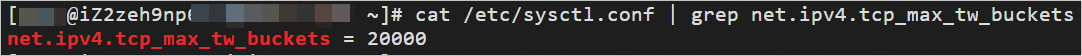

net.ipv4.tcp_max_tw_bucketsparameter:cat /etc/sysctl.conf | grep net.ipv4.tcp_max_tw_bucketsThe command output shown in following figure indicates that the value of the

net.ipv4.tcp_max_tw_bucketsparameter is 20000.
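The connection count can also be taken without netstat by reading /proc/net/tcp, where the st column holds each socket's state as a hexadecimal code. The Python sketch below (hypothetical function names) counts the states and compares the TIME_WAIT count with a tcp_max_tw_buckets value. It handles one file at a time, so IPv6 sockets in /proc/net/tcp6 need a separate call.

```python
from collections import Counter

# Hexadecimal codes in the "st" column of /proc/net/tcp and /proc/net/tcp6,
# as defined in the kernel header include/net/tcp_states.h.
TCP_STATES = {
    "01": "ESTABLISHED", "02": "SYN_SENT", "03": "SYN_RECV",
    "04": "FIN_WAIT1", "05": "FIN_WAIT2", "06": "TIME_WAIT",
    "07": "CLOSE", "08": "CLOSE_WAIT", "09": "LAST_ACK",
    "0A": "LISTEN", "0B": "CLOSING",
}


def count_tcp_states(proc_net_tcp: str) -> Counter:
    """Count sockets per TCP state in the text of /proc/net/tcp."""
    counts: Counter = Counter()
    for line in proc_net_tcp.splitlines()[1:]:  # skip the header line
        fields = line.split()
        if len(fields) > 3:
            code = fields[3].upper()
            counts[TCP_STATES.get(code, code)] += 1
    return counts


def time_wait_headroom(counts: Counter, max_tw_buckets: int) -> int:
    """TIME_WAIT sockets that still fit below net.ipv4.tcp_max_tw_buckets.

    A value of zero or less means that the limit has been reached.
    """
    return max_tw_buckets - counts["TIME_WAIT"]
```

Add the Counter objects for /proc/net/tcp and /proc/net/tcp6 to get totals for both address families. The same counts also show connections in the FIN_WAIT2 and CLOSE_WAIT states.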

Change the value of the net.ipv4.tcp_max_tw_buckets parameter.

Run the following command to open the /etc/sysctl.conf file:

vi /etc/sysctl.conf

Press the I key to enter Insert mode. Change the value of the net.ipv4.tcp_max_tw_buckets parameter. Example: 65535.

net.ipv4.tcp_max_tw_buckets = 65535

Press the Esc key and enter :wq to save and close the file.

Run the following command for the configurations to take effect:

sysctl -p

Why does a Linux ECS instance have a large number of TCP connections in the FIN_WAIT2 state?

Problem description

A large number of TCP connections on the Linux ECS instance are in the FIN_WAIT2 state.

Cause

This issue may occur because of the following reasons:

In an HTTP service, the server actively closes a connection for a specific reason. For example, when a keepalive connection times out, the server closes the connection. After the client acknowledges the server's FIN, the server side of the connection enters the FIN_WAIT2 state.

TCP supports half-closed connections. Unlike the TIME_WAIT state, the FIN_WAIT2 state has no protocol-defined timeout: the connection stays in FIN_WAIT2 until the peer closes its side. If the client never closes the connection, connections in the FIN_WAIT2 state accumulate and consume kernel memory, which can eventually affect system stability. For connections that the local application has already closed, the kernel limits this time by using the net.ipv4.tcp_fin_timeout parameter.
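The half-closed state can be reproduced with loopback sockets. In the Python sketch below (an illustration, not a diagnostic tool; the function name is hypothetical), the server calls shutdown(SHUT_WR), which sends a FIN. Once the client acknowledges it, the server side is in FIN_WAIT2 and the client side is in CLOSE_WAIT, yet the client can still send data. Because the server socket in this sketch is still open, net.ipv4.tcp_fin_timeout does not apply to it.

```python
from __future__ import annotations

import socket


def half_close_demo() -> tuple[bytes, bytes]:
    """Half-close a loopback TCP connection and keep using the other direction.

    Returns (what the client reads after the server's FIN,
    what the server reads afterwards).
    """
    listener = socket.create_server(("127.0.0.1", 0))  # port 0: any free port
    client = socket.create_connection(listener.getsockname())
    server, _ = listener.accept()
    try:
        # Server sends FIN; after the client ACKs it, the server side is in
        # FIN_WAIT2 and the client side is in CLOSE_WAIT.
        server.shutdown(socket.SHUT_WR)
        eof = client.recv(1024)        # b"" signals end of stream
        client.sendall(b"late data")   # the client can still send
        late = server.recv(1024)       # and the server can still receive
        return eof, late
    finally:
        client.close()
        server.close()
        listener.close()
```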

Solution

You can decrease the value of the net.ipv4.tcp_fin_timeout parameter to accelerate the termination of TCP connections in the FIN_WAIT2 state.

Use VNC to connect to the instance.

For more information, see Connect to an instance by using VNC.

Change the value of the net.ipv4.tcp_fin_timeout parameter.

Run the following command to open the /etc/sysctl.conf file:

vi /etc/sysctl.conf

Press the I key to enter Insert mode. Change the value of the net.ipv4.tcp_fin_timeout parameter. Example: 10.

net.ipv4.tcp_fin_timeout = 10

Press the Esc key and enter :wq to save and close the file.

Run the following command for the configurations to take effect:

sysctl -p

Why does a Linux ECS instance have a large number of TCP connections in the CLOSE_WAIT state?

Problem description

A large number of TCP connections on the Linux ECS instance are in the CLOSE_WAIT state.

Cause

This issue typically occurs because the application on the local end does not close connections after the peer has closed them.

TCP uses a four-way handshake to terminate a connection, and either end can initiate the termination. If the peer closes the connection but the local end does not, the local end of the connection enters the CLOSE_WAIT state and stays there until the local application closes the socket. The peer has finished sending on such a half-closed connection, so the local end should close it as soon as possible.

Solution

We recommend that your program detect connections that have been closed by the peer and close them on the local end.

Connect to the ECS instance.

For more information, see Connection method overview.

Check for and close TCP connections in the CLOSE_WAIT state in your program.

The return values of read and write calls reveal connections that have been closed by the peer. Use one of the following methods to close such connections in Java or C:

Java

Call the read method of the input stream of the socket. A return value of -1 indicates that the end of the stream has been reached, which means that the peer has closed the connection. Then, call the close method to close the connection.

C

Check the return value of the read function.

If the return value is 0, the peer has closed the connection. Close the connection.

If the return value is less than 0, check errno. If errno is not EAGAIN (or EWOULDBLOCK), close the connection.
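The same rules can be expressed for applications written in Python. In this sketch (the helper name read_or_close is hypothetical), recv() returning an empty bytes object corresponds to read() returning 0, and BlockingIOError on a non-blocking socket corresponds to EAGAIN.

```python
from __future__ import annotations

import socket


def read_or_close(sock: socket.socket) -> tuple[bool, bytes]:
    """Read once from a non-blocking socket, following the C rules above.

    Returns (still_open, data). The socket is closed when recv() returns
    no bytes (the peer has closed, so the local end would otherwise stay
    in CLOSE_WAIT) or fails with an error other than EAGAIN/EWOULDBLOCK.
    """
    try:
        data = sock.recv(4096)
    except BlockingIOError:   # EAGAIN/EWOULDBLOCK: no data yet, keep the socket
        return True, b""
    except OSError:           # any other error: close the connection
        sock.close()
        return False, b""
    if not data:              # read() returned 0: the peer closed the connection
        sock.close()
        return False, b""
    return True, data
```

BlockingIOError is a subclass of OSError, so it must be caught first.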

Why am I unable to access an ECS instance or an ApsaraDB RDS instance after I configure NAT for my client?

Problem description

After NAT is configured, the client cannot access ECS or RDS instances on the server side, including ECS instances in VPCs with source NAT (SNAT) enabled.

Cause

This issue may occur because the net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps parameters are both set to 1 on the server side.

When both parameters are set to 1, the server records the latest TCP timestamp received from each client IP address and discards, without responding, connection requests whose timestamps are lower than the recorded value. Clients behind NAT share the same public IP address but send unrelated timestamps, so connection requests from some of these clients are discarded.
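The following Python sketch is a deliberately simplified model of that check, for illustration only: the class name is hypothetical, and the real kernel logic also applies time windows that the model omits. It shows why two clients behind one NAT address can interfere with each other.

```python
from __future__ import annotations


class RecyclePawsModel:
    """Toy model of the per-IP timestamp check enabled by tcp_tw_recycle."""

    def __init__(self) -> None:
        # Latest TCP timestamp value seen from each source IP address.
        self.last_ts: dict[str, int] = {}

    def accept_syn(self, src_ip: str, ts_val: int) -> bool:
        """Return False if a SYN would be dropped silently."""
        last = self.last_ts.get(src_ip)
        if last is not None and ts_val < last:
            return False  # older timestamp from the same IP: no response
        self.last_ts[src_ip] = ts_val
        return True
```

For example, if client A behind a NAT gateway has a timestamp clock far ahead of client B behind the same gateway, every SYN from B is dropped after A has connected once.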

Solution

Choose a solution based on the cloud service that is deployed on the server side.

If an ECS instance is deployed as a remote server, set the net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps parameters to 0 on the server.

If an ApsaraDB RDS instance is deployed as a remote server, you cannot modify the kernel parameters on the server. Instead, change the net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps parameters to 0 on the client.

Use VNC to connect to the instance.

For more information, see Connect to an instance by using VNC.

Change the values of the net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps parameters to 0.

Run the following command to open the /etc/sysctl.conf file:

vi /etc/sysctl.conf

Press the I key to enter Insert mode. Change the values of the net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps parameters to 0.

net.ipv4.tcp_tw_recycle = 0
net.ipv4.tcp_timestamps = 0

On Linux kernel 4.12 and later, the net.ipv4.tcp_tw_recycle parameter does not exist. Set only the net.ipv4.tcp_timestamps parameter.

Press the Esc key and enter :wq to save and close the file.

Run the following command for the configurations to take effect:

sysctl -p

Common Linux kernel parameters

| Parameter | Description |
| --- | --- |
| net.core.rmem_default | The default size of the socket receive buffer. Unit: byte. |
| net.core.rmem_max | The maximum size of the socket receive buffer. Unit: byte. |
| net.core.wmem_default | The default size of the socket send buffer. Unit: byte. |
| net.core.wmem_max | The maximum size of the socket send buffer. Unit: byte. |
| net.core.netdev_max_backlog | The maximum number of packets that can be queued on the receive side when the network interface controller (NIC) receives packets faster than the kernel can process them. |
| net.core.somaxconn | This global parameter specifies the maximum length of the listen queue of each port. |
| net.core.optmem_max | The maximum ancillary buffer size allowed for each socket. Unit: byte. |
| net.ipv4.tcp_mem | The memory usage thresholds of the TCP stack. Unit: memory page, which is 4 KB in most cases. |
| net.ipv4.tcp_rmem | The receive buffer sizes that sockets use for automatic buffer tuning. Unit: byte. |
| net.ipv4.tcp_wmem | The send buffer sizes that sockets use for automatic buffer tuning. Unit: byte. |
| net.ipv4.tcp_keepalive_time | The time that a TCP connection must remain idle before TCP starts to send keepalive messages to check whether the connection is valid. Unit: seconds. |
| net.ipv4.tcp_keepalive_intvl | The interval at which TCP resends a keepalive message if no response is returned. Unit: seconds. |
| net.ipv4.tcp_keepalive_probes | The maximum number of keepalive messages that are sent before a TCP connection is considered invalid. |
| net.ipv4.tcp_sack | Specifies whether to enable TCP selective acknowledgment (SACK). A value of 1 indicates that TCP SACK is enabled. SACK allows the sender to retransmit only the missing segments, which improves performance. We recommend that you enable this feature for wide area network (WAN) communications. Note that this feature increases CPU utilization. |
| net.ipv4.tcp_timestamps | Specifies whether to enable the TCP timestamp option (RFC 1323), which adds 12 bytes to the TCP header. Timestamps allow the round-trip time (RTT) to be calculated more accurately than the retransmission timeout method. We recommend that you enable this option to improve performance. |
| net.ipv4.tcp_window_scaling | Specifies whether to enable window scaling as defined in RFC 1323. To allow the system to use TCP windows larger than 64 KB, set the value to 1. The maximum TCP window size is 1 GB. Window scaling takes effect only when it is enabled on both ends of a TCP connection. |
| net.ipv4.tcp_syncookies | Specifies whether to enable TCP SYN cookies. |
| net.ipv4.tcp_tw_reuse | Specifies whether sockets in the TIME-WAIT state can be reused to establish new TCP connections. |
| net.ipv4.tcp_tw_recycle | Specifies whether the system recycles sockets in the TIME-WAIT state as early as possible. This parameter was removed in Linux kernel 4.12. |
| net.ipv4.tcp_fin_timeout | The time that a TCP connection remains in the FIN-WAIT-2 state after the local end closes the socket. Unit: seconds. The timeout exists because the peer may fail, never close its side of the connection, or terminate unexpectedly. |
| net.ipv4.ip_local_port_range | The range of local port numbers that TCP and UDP can choose for outgoing connections. |
| net.ipv4.tcp_max_syn_backlog | The maximum number of TCP connection requests in the SYN_RECV state, that is, requests that have not yet received an ACK from the client. |
| net.ipv4.tcp_westwood | Enables the TCP Westwood congestion control algorithm on the sending side. The algorithm continuously estimates throughput and attempts to optimize overall bandwidth usage. We recommend that you enable this algorithm for WAN communications. |
| net.ipv4.tcp_bic | Specifies whether to enable Binary Increase Congestion control (BIC), which is designed for long-distance networks to make better use of gigabit connections. We recommend that you enable this feature for WAN communications. |
| net.ipv4.tcp_max_tw_buckets | The maximum number of connections allowed in the TIME_WAIT state. When this value is exceeded, additional connections are closed immediately. The default value varies with the instance memory size. The maximum default value is 262144. |
| net.ipv4.tcp_synack_retries | The number of times that a SYN-ACK packet is retransmitted for a connection in the SYN_RECV state. |
| net.ipv4.tcp_abort_on_overflow | A value of 1 enables the system to send RST packets to reset connections when the system receives a large number of requests within a short period of time and the relevant applications cannot process them. We recommend that you instead improve the processing efficiency of applications. Default value: 0. |
| net.ipv4.route.max_size | The maximum number of routes allowed by the kernel. |
| net.ipv4.ip_forward | Specifies whether IPv4 packet forwarding is enabled. |
| net.ipv4.ip_default_ttl | The default time to live (TTL) of outgoing IPv4 packets, which is the maximum number of hops through which a packet can pass. |
| net.netfilter.nf_conntrack_tcp_timeout_established | The timeout of connection tracking entries for established TCP connections. If no packets are transmitted over an established connection within this period, netfilter removes its tracking entry. Unit: seconds. Default value: 432000. |
| net.netfilter.nf_conntrack_max | The maximum number of connections that the connection tracking table can hold. |
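The three keepalive parameters combine into a worst-case time for detecting a dead peer on a connection that has keepalive enabled (SO_KEEPALIVE): the idle time, plus one interval for each unanswered keepalive message. A small Python sketch of that arithmetic; the function name is hypothetical:

```python
def keepalive_detection_seconds(keepalive_time: int, keepalive_intvl: int,
                                keepalive_probes: int) -> int:
    """Worst-case seconds before TCP keepalive reports an idle dead peer.

    The first keepalive message is sent after keepalive_time seconds of
    idleness; up to keepalive_probes messages are then sent keepalive_intvl
    seconds apart before the connection is considered invalid.
    """
    return keepalive_time + keepalive_probes * keepalive_intvl
```

With the common Linux defaults of 7200, 75, and 9, the result is 7875 seconds, a little over two hours.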