このページでは、E-MapReduce クラスター上で Pig ジョブを作成し実行する方法について説明します。

Pig で OSS を使用

OSS パスには以下の形式を使用します。

oss://${AccessKeyId}:${AccessKeySecret}@${bucket}.${endpoint}/${path}パラメーターの説明 :

-

${accessKeyId} : お客様のアカウントの AccessKey ID

-

${accessKeySecret} : AccessKey ID に対応する AccessKey Secret

-

${bucket} : AccessKey ID に対応するバケット

-

${endpoint} : OSS へのアクセスに使用するネットワーク。 クラスターが存在するリージョンによって異なり、対応する OSS もクラスターが存在するリージョンにある必要があります。

特定の値に関する詳細は、 「OSS エンドポイント (OSS endpoint)」をご参照ください。

-

${path} : バケット内のパス

Pig の script1-hadoop.pig を例に取り上げます。 Pig 内の tutorial.jar および excite.log.bz2 を OSS にアップロードします。 ファイルをアップロードする URL がそれぞれ oss://emr/jars/tutorial.jar および oss://emr/data/excite.log.bz2 であるとします。

以下のステップを実行します。

- スクリプトの作成

以下のとおり、JAR ファイルのパスおよびスクリプト内の 入力パスと出力パスを編集します。OSS パスの形式が oss://${accesskeyId}:${accessKeySecret}@${bucket}.${endpoint}/object/path であることにご注意ください。

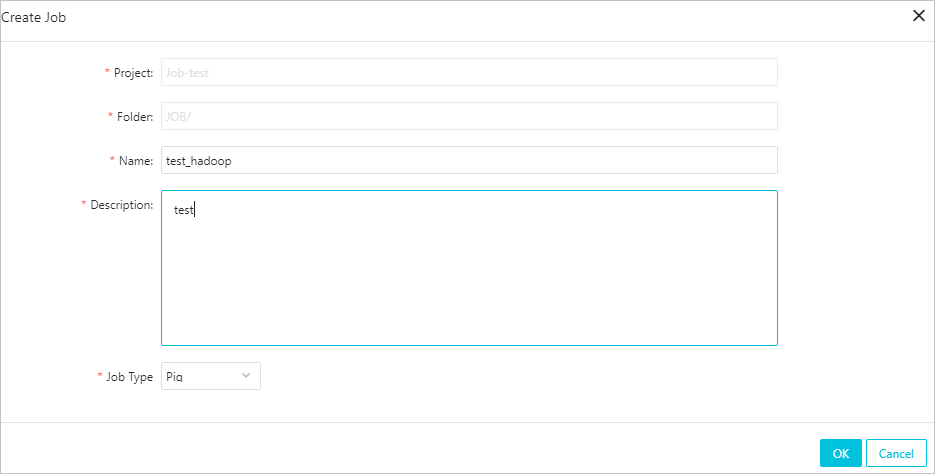

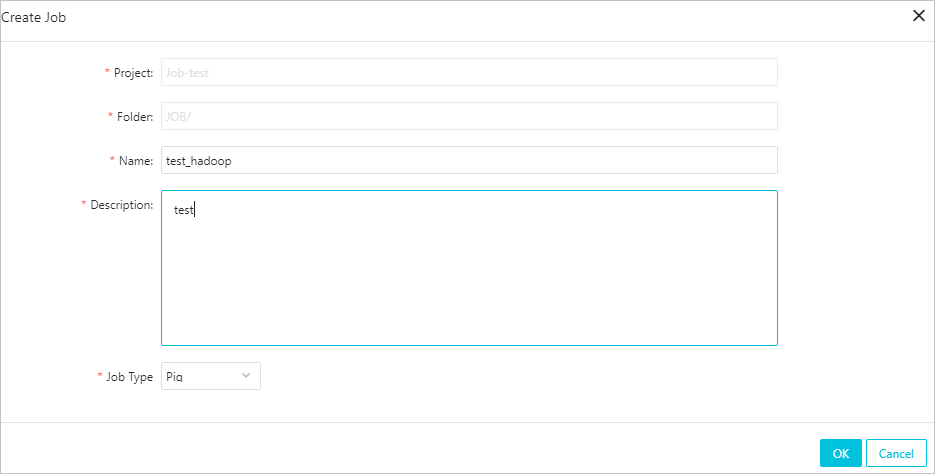

/* * Licensed to the Apache Software Foundation (ASF) under one * or more contributor license agreements. NOTICE ファイルの表示 * distributed with this work for additional information * regarding copyright ownership. The ASF licenses this file * to you under the Apache License, Version 2.0 (the * "License"); you may not use this file except in compliance * with the License. You may obtain a copy of the License at * * http://www.apache.org/licenses/LICENSE-2.0 * * Unless required by applicable law or agreed to in writing, software * distributed under the License is distributed on an "AS IS" BASIS, * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. * See the License for the specific language governing permissions and * limitations under the License. */ -- Query Phrase Popularity (Hadoop cluster) -- This script processes a search query log file from the Excite search engine and finds search phrases that occur with particular high frequency during certain times of the day. -- Register the tutorial JAR file so that the included UDFs can be called in the script. REGISTER oss://${AccessKeyId}:${AccessKeySecret}@${bucket}.${endpoint}/data/tutorial.jar; -- Use the PigStorage function to load the excite log file into the ▒raw▒ bag as an array of records. -- Input: (user,time,query) raw = LOAD 'oss://${AccessKeyId}:${AccessKeySecret}@${bucket}.${endpoint}/data/excite.log.bz2' USING PigStorage('\t') AS (user, time, query); -- Call the NonURLDetector UDF to remove records if the query field is empty or a URL. clean1 = FILTER raw BY org.apache.pig.tutorial.NonURLDetector(query); -- Call the ToLower UDF to change the query field to lowercase. clean2 = FOREACH clean1 GENERATE user, time, org.apache.pig.tutorial.ToLower(query) as query; -- Because the log file only contains queries for a single day, we are only interested in the hour. -- The excite query log timestamp format is YYMMDDHHMMSS. -- Call the ExtractHour UDF to extract the hour (HH) from the time field. houred = FOREACH clean2 GENERATE user, org.apache.pig.tutorial.ExtractHour(time) as hour, query; -- Call the NGramGenerator UDF to compose the n-grams of the query. ngramed1 = FOREACH houred GENERATE user, hour, flatten(org.apache.pig.tutorial.NGramGenerator(query)) as ngram; -- Use the DISTINCT command to get the unique n-grams for all records. ngramed2 = DISTINCT ngramed1; -- Use the GROUP command to group records by n-gram and hour. hour_frequency1 = GROUP ngramed2 BY (ngram, hour); -- Use the COUNT function to get the count (occurrences) of each n-gram. hour_frequency2 = FOREACH hour_frequency1 GENERATE flatten($0), COUNT($1) as count; -- Use the GROUP command to group records by n-gram only. -- Each group now corresponds to a distinct n-gram and has the count for each hour. uniq_frequency1 = GROUP hour_frequency2 BY group::ngram; -- For each group, identify the hour in which this n-gram is used with a particularly high frequency. -- Call the ScoreGenerator UDF to calculate a "popularity" score for the n-gram. uniq_frequency2 = FOREACH uniq_frequency1 GENERATE flatten($0), flatten(org.apache.pig.tutorial.ScoreGenerator($1)); -- Use the FOREACH-GENERATE command to assign names to the fields. uniq_frequency3 = FOREACH uniq_frequency2 GENERATE $1 as hour, $0 as ngram, $2 as score, $3 as count, $4 as mean; -- Use the FILTER command to move all records with a score less than or equal to 2.0. filtered_uniq_frequency = FILTER uniq_frequency3 BY score > 2.0; -- Use the ORDER command to sort the remaining records by hour and score. ordered_uniq_frequency = ORDER filtered_uniq_frequency BY hour, score; -- Use the PigStorage function to store the results. -- Output: (hour, n-gram, score, count, average_counts_among_all_hours) STORE ordered_uniq_frequency INTO 'oss://${AccessKeyId}:${AccessKeySecret}@${bucket}.${endpoint}/data/script1-hadoop-results' USING PigStorage(); - ジョブの作成

ステップ 1 で作成したスクリプトを OSS に格納します。 たとえば、ストレージパスが oss://emr/jars/script1-hadoop.pig の場合は、以下のステップを実行して E-MapReduce にジョブを作成します。

- 実行プランの作成および実行

E-MapReduce に実行プランを作成し、作成した Pig ジョブを実行プランに追加します。 ポリシーとして [今すぐ実行]を選択し、script1-hadoop ジョブを選択したクラスターで実行できるようにします。